An LLM benchmark for Svelte 5, based on the methodology in OpenAI's paper "Evaluating Large Language Models Trained on Code".

SvelteBench is a benchmarking tool that evaluates how well large language models (LLMs) generate Svelte components. It follows the methodology of the OpenAI paper "Evaluating Large Language Models Trained on Code" and uses a structured approach similar to the HumanEval dataset. It is aimed at developers who want to compare the correctness of Svelte components produced by different LLM providers by running them against predefined tests.

SvelteBench automates both generation and validation: it sends prompts to the configured LLMs, then runs the generated components against tests to check functionality and correctness.
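The paper referenced above scores models with the pass@k metric: the probability that at least one of k sampled solutions passes the tests, estimated from n generated samples of which c passed. The sketch below is a minimal TypeScript version of that estimator in its numerically stable product form. The function name `passAtK` is hypothetical, and this text does not state which values of n and k SvelteBench uses or exactly how it reports scores, so treat it as an illustration of the metric rather than the tool's own code.

```typescript
// Unbiased pass@k estimator from "Evaluating Large Language Models Trained on Code":
//   pass@k = 1 - C(n - c, k) / C(n, k)
// n: samples generated for a task, c: samples that passed, k: sampling budget.
// `passAtK` is a hypothetical name used for illustration only.
function passAtK(n: number, c: number, k: number): number {
  if (k < 1 || k > n || c < 0 || c > n) {
    throw new RangeError("expected 1 <= k <= n and 0 <= c <= n");
  }
  // Fewer than k failing samples: every draw of k must contain a passing one.
  if (n - c < k) return 1;

  // C(n - c, k) / C(n, k) written as a product to avoid large factorials.
  let allFail = 1;
  for (let i = n - c + 1; i <= n; i++) {
    allFail *= 1 - k / i;
  }
  return 1 - allFail;
}

// Example: 10 samples, 3 passing. pass@1 reduces to c / n, approximately 0.3.
const passAt1 = passAtK(10, 3, 1);
```

For k = 1 the estimator is simply the fraction of samples that passed, which is the easiest value to sanity-check against a benchmark run.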

Multi-Provider Support: SvelteBench supports multiple LLM providers, including OpenAI, Anthropic, Google, and OpenRouter.

Debug Mode: Debug mode restricts a run to a single provider or model, which shortens iteration time during development.

Contextual Generation: Users can supply a context file, such as the Svelte documentation, to give the LLM reference material and improve the accuracy of the generated components.

Custom Test Addition: Developers can add new tests by creating the designated directories and files, so the benchmark can be tailored to a project's needs.

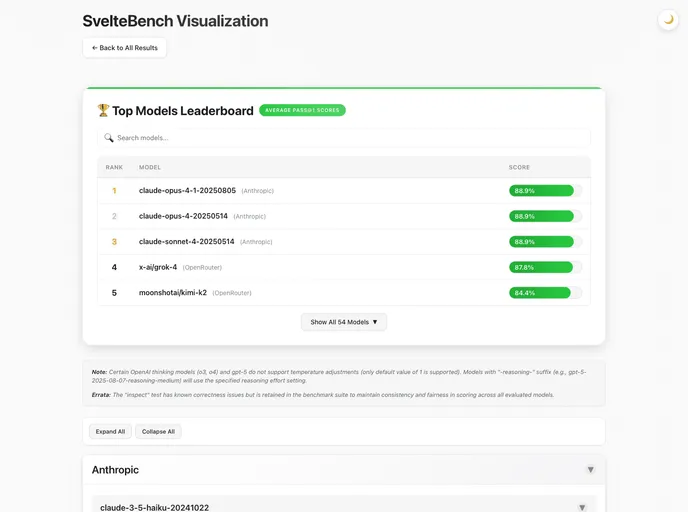

Result Visualization: After a benchmark run, the results can be viewed with a built-in visualization tool, which makes the performance metrics easier to interpret.

Timestamped Results Storage: Benchmark results are saved as structured JSON files whose names include a timestamp, so individual runs are easy to identify and organize.
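As a rough illustration of timestamped JSON storage, the sketch below builds a filesystem-safe filename from an ISO 8601 timestamp and serializes a list of results. Everything specific here is an assumption: the `benchmark-results` prefix, the `BenchmarkResult` fields, and the helper names are hypothetical and may not match what SvelteBench writes.

```typescript
// Hypothetical shape of one result entry; the real schema is not described in this text.
interface BenchmarkResult {
  provider: string;
  model: string;
  test: string;
  passed: boolean;
}

// Builds a name like "<prefix>-2025-01-02T03-04-05-678Z.json".
// ":" and "." are replaced because ":" is not allowed in filenames on some systems.
function resultsFileName(now: Date, prefix: string = "benchmark-results"): string {
  const stamp = now.toISOString().replace(/[:.]/g, "-");
  return `${prefix}-${stamp}.json`;
}

// Pairs the timestamped filename with pretty-printed JSON, ready to be written to disk.
function serializeResults(
  results: BenchmarkResult[],
  now: Date
): { fileName: string; body: string } {
  return {
    fileName: resultsFileName(now),
    body: JSON.stringify(results, null, 2),
  };
}

// Usage with placeholder data.
const output = serializeResults(
  [{ provider: "example-provider", model: "example-model", test: "counter", passed: true }],
  new Date(Date.UTC(2025, 0, 2, 3, 4, 5, 678))
);
```

Because ISO 8601 timestamps sort lexicographically in chronological order, a plain directory listing of files named this way shows runs from oldest to newest.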